Concurrency Control in Nested For Each Loops in Azure Logic Apps (Consumption)

Prerequisites to reproduce the test cases:

- Access to an Azure subscription.

- Basic knowledge of Azure Logic Apps.

- An SFTP server.

- A Salesforce Professional Edition or higher license (the trial edition does not work because it lacks the API Access feature) (1).

Audience

This article is aimed at Azure Logic Apps developers and integration architects who actively use Azure Logic Apps for workflow automation and integration tasks. It explains how to handle nested for each loops efficiently and how to overcome the lack of parallel execution in nested for each loops.

Context

Azure Logic Apps provides a powerful workflow automation platform that lets you create, schedule, and manage workflows that integrate various services and applications. A common scenario in workflow automation is iterating over nested arrays or collections, usually with nested for each loops.

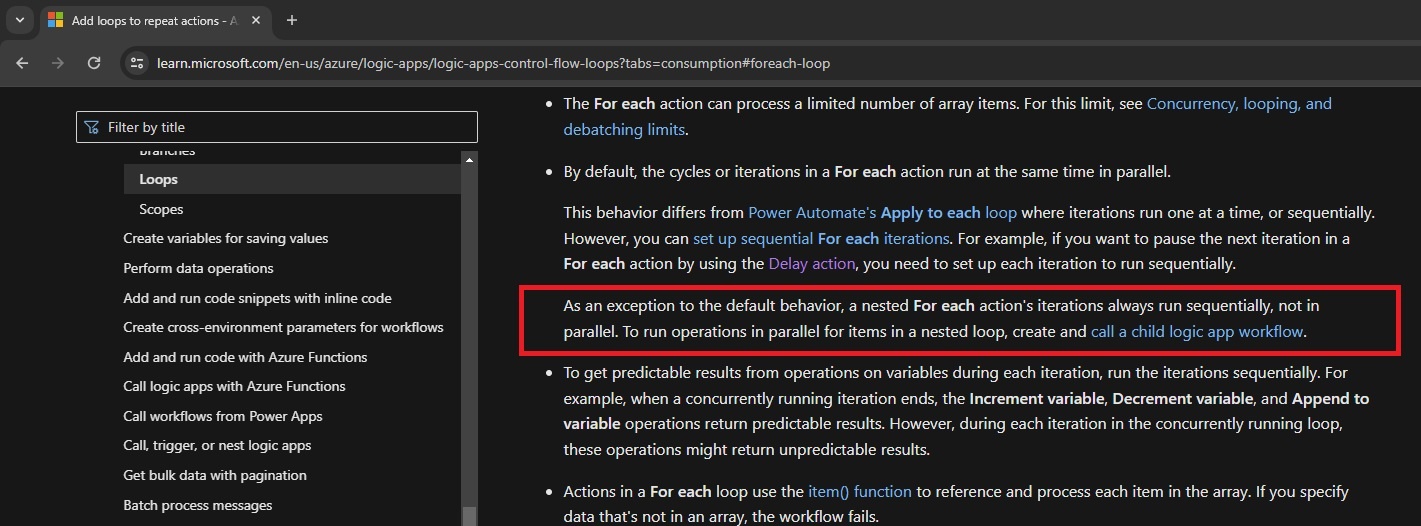

However, the iterations of a nested for each action always run sequentially, not in parallel (2).

This can create bottlenecks during execution, especially when the loop body involves data enrichment, validation, or exception handling.

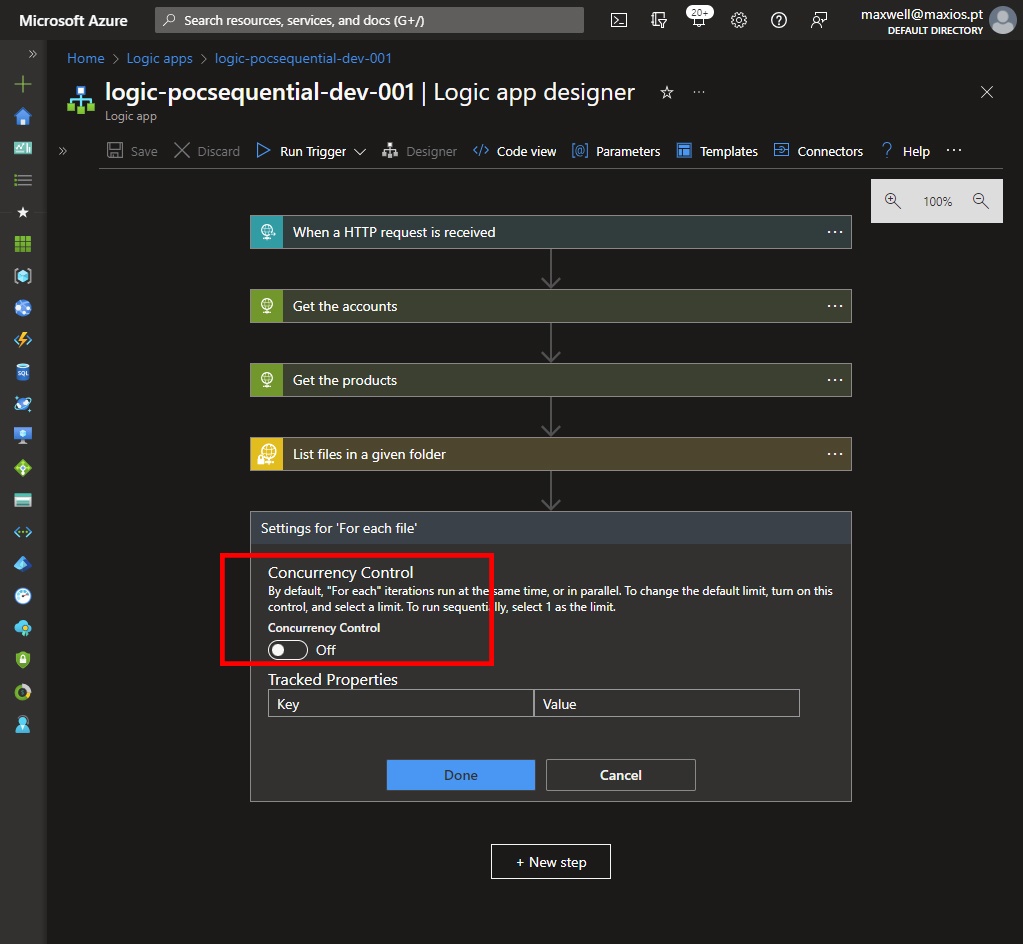

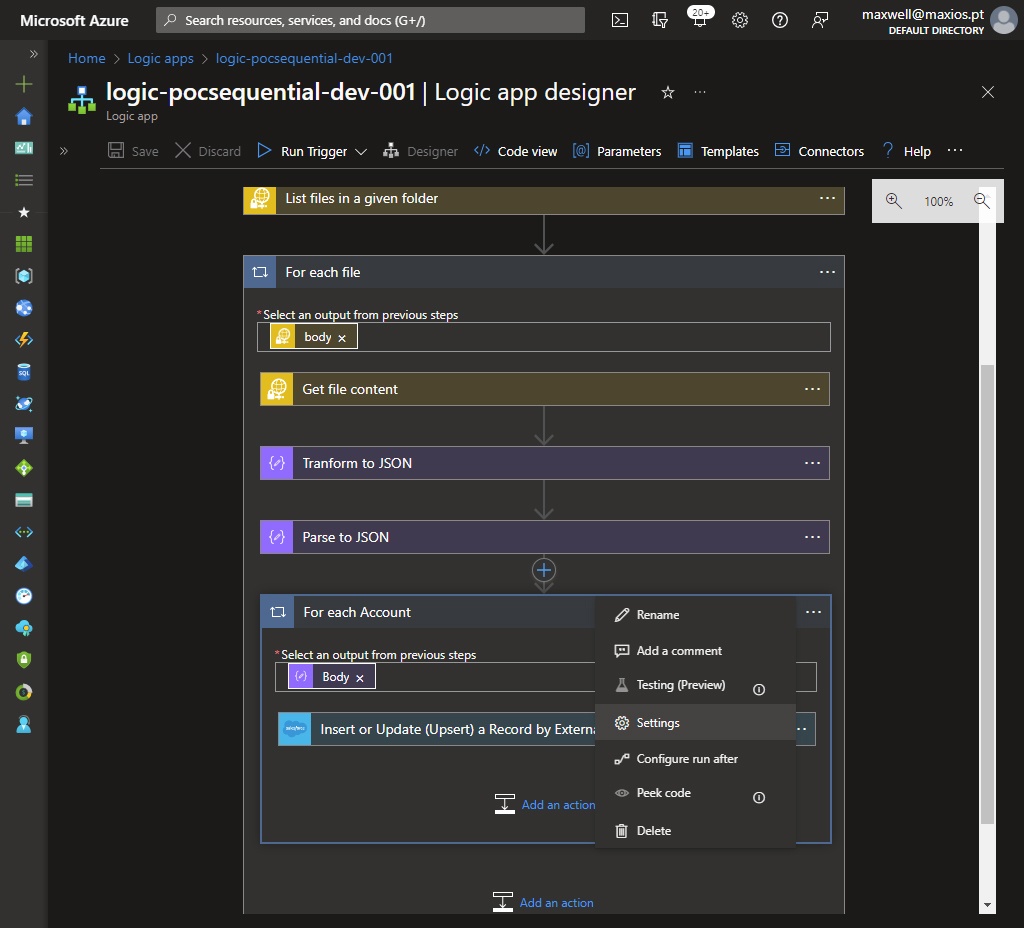

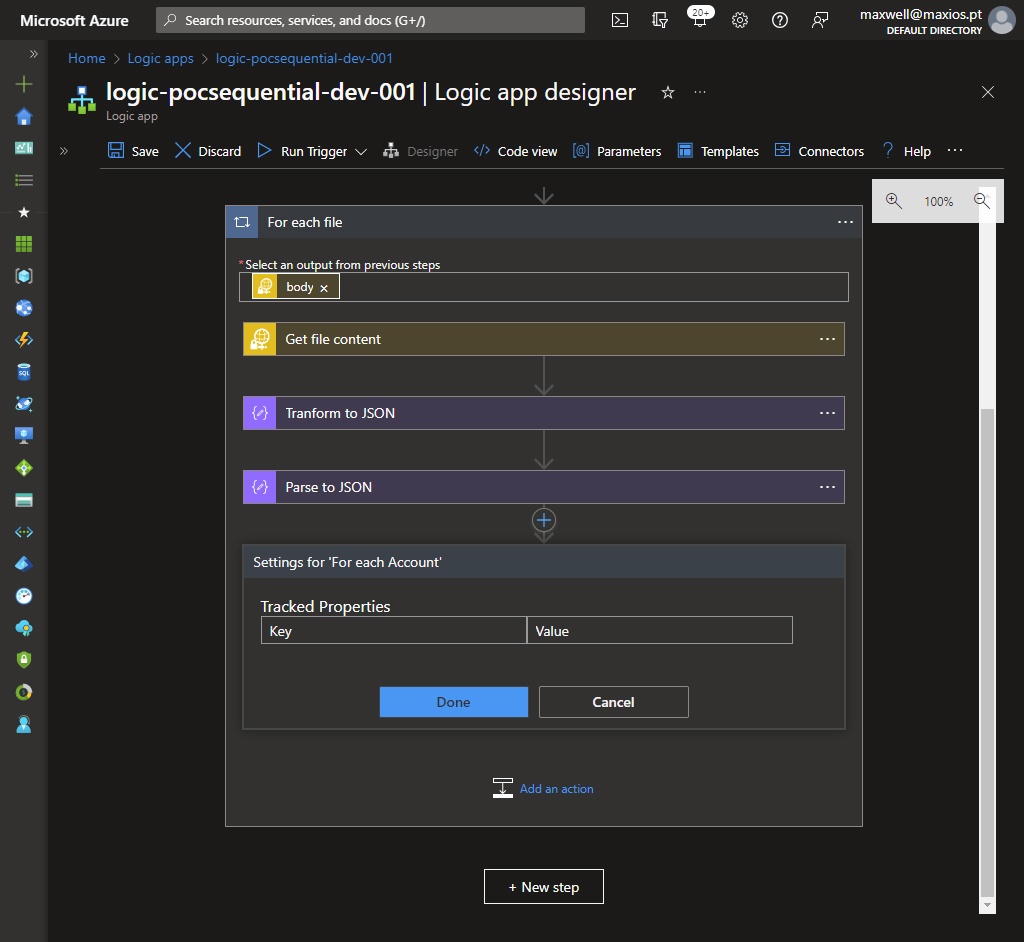

The images above highlight that the "Concurrency Control" setting is only available on the parent (outermost) for each loop.

To overcome this limitation in Logic Apps (Consumption), the idea is to split the solution into two logic apps, so that the inner loop becomes the top-level loop of the second logic app, where concurrency control is available.
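For readers who work in code view, the following Python sketch prints a fragment of the workflow definition behind the single-app design. It shows where the `runtimeConfiguration.concurrency.repetitions` setting sits on the outer `Foreach` action, while the nested loop is left without it because its iterations run sequentially regardless. The action names (`List_files`, `Parse_accounts`, `For_each_file`, `For_each_account`) are hypothetical and the steps that list, read, and parse the files are omitted, so treat this as an illustration rather than a deployable definition.

```python
import json


def single_app_loops(outer_parallelism: int) -> dict:
    """Fragment of the single-app layout: a loop over files with a nested loop over accounts.

    Action names are hypothetical; the steps that list, read and parse files are omitted.
    """
    inner_loop = {
        "type": "Foreach",
        "foreach": "@body('Parse_accounts')",  # array of accounts in the current file
        "actions": {},  # enrichment calls and the Salesforce upsert would go here
        "runAfter": {"Parse_accounts": ["Succeeded"]},
        # No runtimeConfiguration: a nested for each runs sequentially anyway.
    }
    outer_loop = {
        "type": "Foreach",
        "foreach": "@body('List_files')",
        "actions": {"For_each_account": inner_loop},
        "runAfter": {"List_files": ["Succeeded"]},
        # Concurrency control is only available on the top-level loop.
        "runtimeConfiguration": {"concurrency": {"repetitions": outer_parallelism}},
    }
    return {"For_each_file": outer_loop}


if __name__ == "__main__":
    print(json.dumps(single_app_loops(outer_parallelism=3), indent=2))
```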

Proof of concept scenario

Suppose an on-premises system produces batches of XML files containing information about its client portfolio. These files need to be ingested by a logic app, go through data enrichment, and then be loaded into Salesforce.
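As a rough illustration of the ingestion step, the sketch below extracts the `Account` nodes from one batch file with Python's standard `xml.etree.ElementTree`. In the logic app this work is done by workflow actions, not Python, and the real schema is not reproduced here: the root and child element names (`Accounts`, `AccountNumber`, `ProductCode`) are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List


def extract_accounts(xml_text: str) -> List[Dict[str, str]]:
    """Return one dict per Account node, keyed by its child element names.

    Element names are hypothetical placeholders for the real batch-file schema.
    """
    root = ET.fromstring(xml_text)
    accounts = []
    for node in root.iter("Account"):
        # Flatten the direct children of each Account node into a simple record.
        accounts.append({child.tag: (child.text or "").strip() for child in node})
    return accounts


SAMPLE = """<Accounts>
  <Account><AccountNumber>A-001</AccountNumber><ProductCode>P-10</ProductCode></Account>
  <Account><AccountNumber>A-002</AccountNumber><ProductCode>P-20</ProductCode></Account>
</Accounts>"""

if __name__ == "__main__":
    for record in extract_accounts(SAMPLE):
        print(record)
```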

However, these files do not contain enough information to be persisted in Salesforce, so additional requests are needed to enrich the data before sending it to Salesforce:

- Get the account details from an external endpoint.

- Get the product details from another external endpoint.
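The enrichment step can be sketched as a function that merges the account data read from the XML file with the results of the two lookups. The lookups are passed in as functions so the sketch runs without network access; in the logic app they would be two HTTP calls to the external endpoints. The field names (`AccountNumber`, `ProductCode`, `AccountDetails`, `ProductDetails`) are hypothetical.

```python
from typing import Any, Callable, Dict

Record = Dict[str, Any]


def enrich_account(
    account: Record,
    get_account_details: Callable[[str], Record],
    get_product_details: Callable[[str], Record],
) -> Record:
    """Combine the data from the XML file with the two external lookups."""
    enriched = dict(account)  # copy, so the parsed input is left untouched
    enriched["AccountDetails"] = get_account_details(account["AccountNumber"])
    enriched["ProductDetails"] = get_product_details(account["ProductCode"])
    return enriched


if __name__ == "__main__":
    # Dictionary stubs standing in for the two (hypothetical) external endpoints.
    account_api = {"A-001": {"Industry": "Retail"}}
    product_api = {"P-10": {"Name": "Premium"}}
    print(enrich_account(
        {"AccountNumber": "A-001", "ProductCode": "P-10"},
        account_api.__getitem__,
        product_api.__getitem__,
    ))
```

Because each account needs two round trips before its upsert, this per-account latency adds up quickly when the loop over accounts runs sequentially.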

Apart from data enrichment, a more robust, enterprise-grade solution may need to decouple some tasks into separate workflows, or implement error handling with Scope actions to perform tasks such as:

- Write a well-formatted error log as a blob file, or send an email to the support team.

- Revert or roll back previous requests to keep the data consistent.

- Call a custom action in case of unexpected errors.
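A common way to implement this in Logic Apps is a pair of Scope actions, where the second one runs only if the first fails. The Python sketch below prints such a fragment; the `runAfter` statuses (`Failed`, `TimedOut`) and the `result()` expression function are part of the workflow definition language, while the action names (`Try`, `Catch`, `Compose_error_log`) are hypothetical.

```python
import json


def try_catch_scopes() -> dict:
    """Fragment with a Try scope and a Catch scope (action names are hypothetical)."""
    return {
        "Try": {
            "type": "Scope",
            "actions": {},  # enrichment calls and the Salesforce upsert go here
            "runAfter": {},
        },
        "Catch": {
            "type": "Scope",
            "actions": {
                "Compose_error_log": {
                    "type": "Compose",
                    # result('Try') returns the results of the top-level actions in Try.
                    "inputs": "@result('Try')",
                    "runAfter": {},
                },
                # A blob write, an email, or a rollback call could follow here.
            },
            # Run only when the Try scope failed or timed out.
            "runAfter": {"Try": ["Failed", "TimedOut"]},
        },
    }


if __name__ == "__main__":
    print(json.dumps(try_catch_scopes(), indent=2))
```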

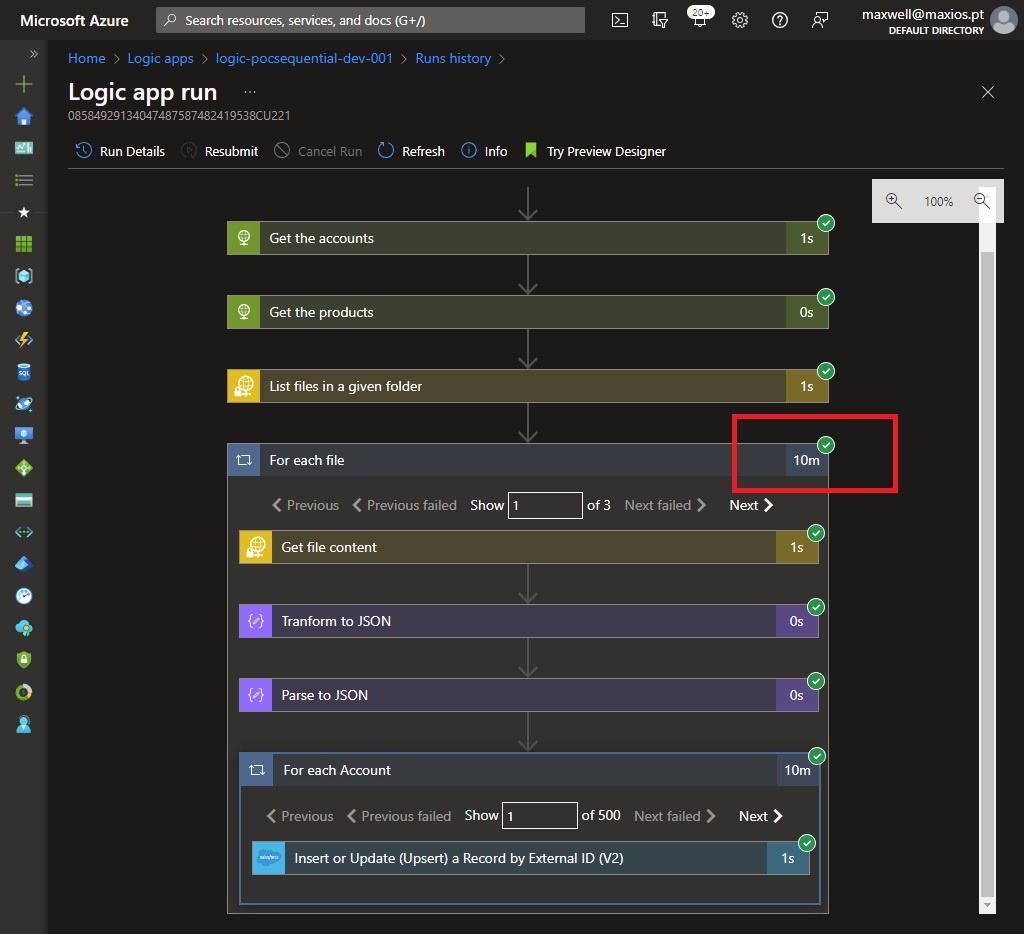

Outcome of the test case with a nested for each loop in a single logic app

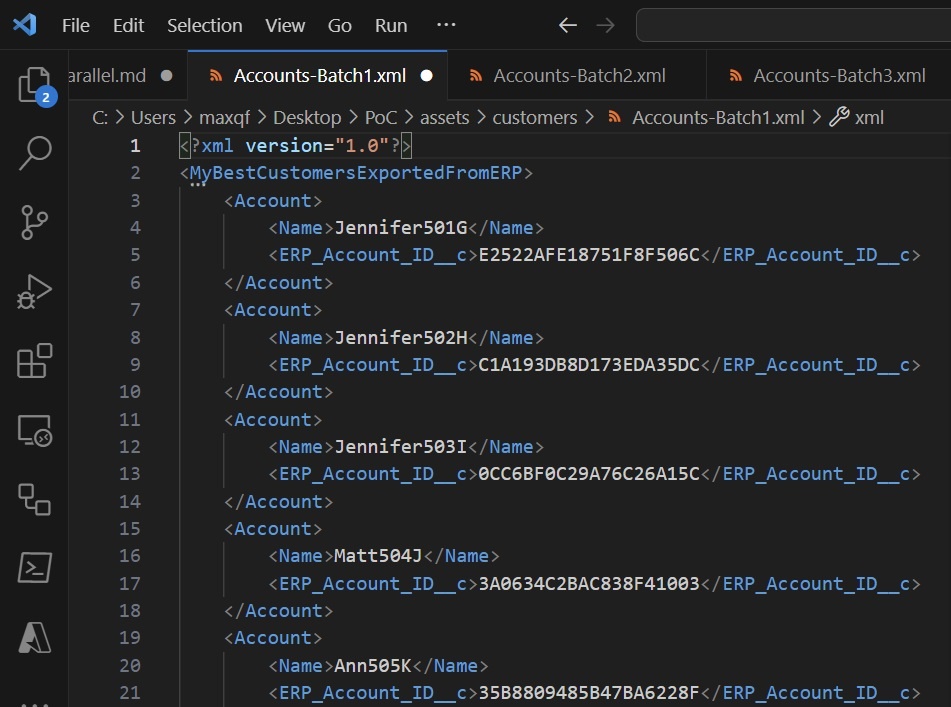

For the test cases, three XML files were provided, each containing 500 Account nodes and following the schema outlined below.

A Logic App (Consumption) named 'logic-pocsequential-dev-001' was created for the first test case scenario (sequential).

Taking into account the Salesforce connector's throttling limit of 900 calls per 60-second renewal period (3), the total execution took approximately 10 minutes.

Outcome of the test case with the nested for each loop split across two logic apps (parallel approach)

All the accounts created in the previous test case were removed from Salesforce.

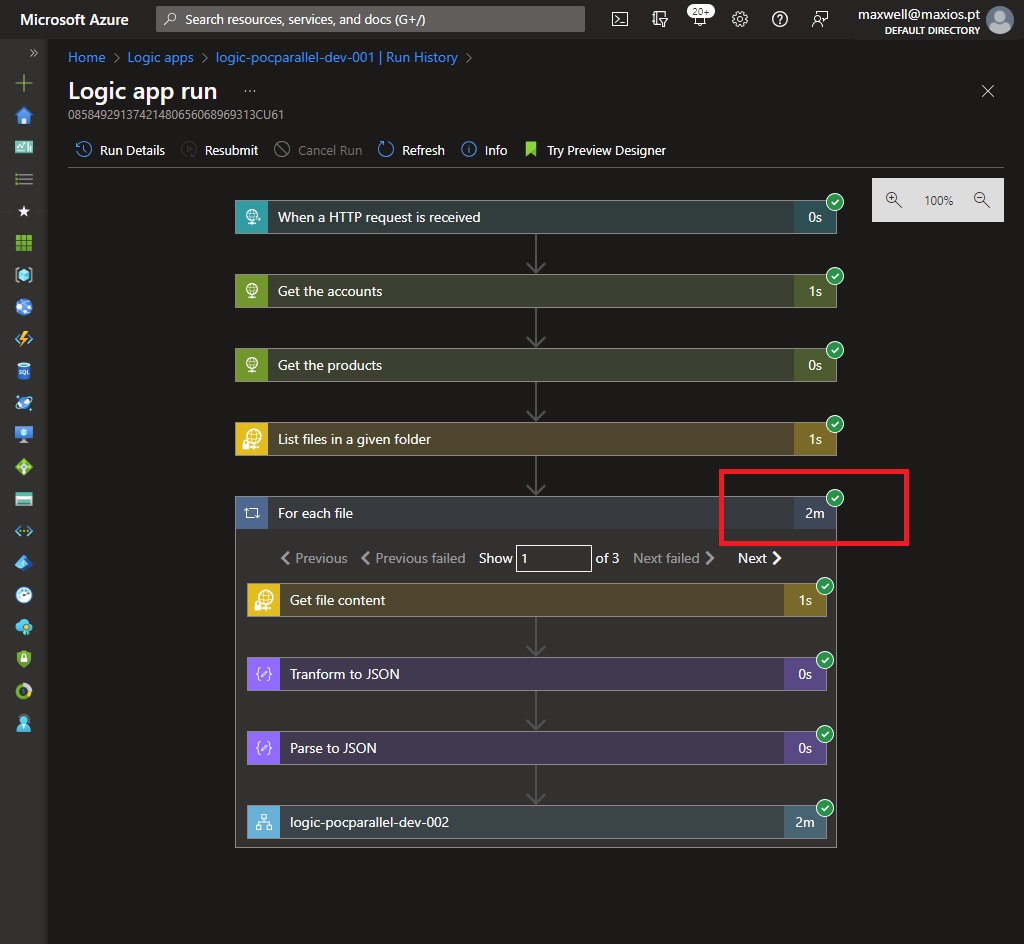

Two Logic Apps (Consumption) instances were created for this test (logic-pocparallel-dev-001 and logic-pocparallel-dev-002), and the same three XML files were provided.

The Salesforce upsert logic was moved to instance "-002". Instead of processing each account in a nested loop inside the parent for each loop, the parent passes the array of all accounts in the current file as the request body to instance "-002", whose own top-level loop over the accounts can use concurrency control.
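In code view, calling a child Consumption workflow from the parent is typically done with an action of type `Workflow`. The Python sketch below prints that action together with the child's top-level loop. The resource ID placeholders, the action names (`Call_child_workflow`, `Parse_accounts`, `For_each_account`), and the trigger name are assumptions; check the child's code view for the actual name of its Request trigger.

```python
import json

# Hypothetical resource ID; the placeholders must be replaced with real values.
CHILD_ID = (
    "/subscriptions/<subscription-id>/resourceGroups/<resource-group>"
    "/providers/Microsoft.Logic/workflows/logic-pocparallel-dev-002"
)


def parent_call_action(child_workflow_id: str, trigger_name: str = "manual") -> dict:
    """Action inside the parent's loop over files: send all accounts of the file to -002."""
    return {
        "Call_child_workflow": {
            "type": "Workflow",
            "inputs": {
                # Name of the child's Request trigger (often 'manual'; verify in code view).
                "host": {"triggerName": trigger_name, "workflow": {"id": child_workflow_id}},
                # Hypothetical earlier step that turns the current file into an array of accounts.
                "body": "@body('Parse_accounts')",
            },
            "runAfter": {"Parse_accounts": ["Succeeded"]},
        }
    }


def child_account_loop(parallelism: int) -> dict:
    """Top-level loop in -002, where concurrency control is available again."""
    return {
        "For_each_account": {
            "type": "Foreach",
            "foreach": "@triggerBody()",  # the array sent by the parent
            "actions": {},  # enrichment calls and the Salesforce upsert
            "runAfter": {},
            "runtimeConfiguration": {"concurrency": {"repetitions": parallelism}},
        }
    }


if __name__ == "__main__":
    print(json.dumps(parent_call_action(CHILD_ID), indent=2))
    print(json.dumps(child_account_loop(parallelism=20), indent=2))
```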

With this approach, the total execution took around 2 minutes for the same 1,500 records.
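A quick back-of-the-envelope check puts both results in context. Assuming one Salesforce connector call per account (enrichment calls go to other endpoints and are not counted), 1,500 calls at 900 calls per 60 seconds cannot finish before the second 60-second window opens. The sketch computes that floor together with the average time per record of each run, using the approximate durations reported above.

```python
import math


def throttle_floor_seconds(calls: int, limit: int = 900, period_s: int = 60) -> int:
    """Earliest time (s) at which the last call can start under a 'limit calls per period' throttle."""
    windows = math.ceil(calls / limit)
    return (windows - 1) * period_s


RECORDS = 1500
SEQUENTIAL_S = 10 * 60  # approximate duration reported for the single logic app
PARALLEL_S = 2 * 60     # approximate duration reported for the two logic apps

if __name__ == "__main__":
    print(throttle_floor_seconds(RECORDS))               # 60
    print(SEQUENTIAL_S / RECORDS, PARALLEL_S / RECORDS)  # 0.4 0.08
    print(SEQUENTIAL_S / PARALLEL_S)                     # 5.0
```

Under these assumptions, the parallel run (about 2 minutes) is within a small factor of the roughly 60-second throttling floor, whereas the sequential run (about 10 minutes) is far above it, which suggests that the single-app duration was dominated by sequential execution rather than by the throttling limit.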

Conclusion

For this particular scenario, developers might consider merging the XML files before processing the accounts to avoid the nested for each loop. While this may be a reasonable option for a small set of files, it becomes more complex when you need to log and report failing accounts (nodes) per XML file. Alternatively, developers might switch to the SFTP trigger "When a file is added or updated" and take advantage of the "splitOn" feature if precise batch file processing times are not required.
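To illustrate the reporting concern, the sketch below merges the `Account` nodes of several files into one flat list while remembering each node's source file, so failures can still be reported per file. It uses Python's standard `xml.etree.ElementTree`; the file names, the `Accounts` root element, and the idea of identifying failures by their index in the merged list are hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Dict, Iterable, List, Tuple


def merge_with_provenance(files: Dict[str, str]) -> List[Tuple[str, ET.Element]]:
    """Flatten the Account nodes of several files, tagging each with its source file name."""
    merged: List[Tuple[str, ET.Element]] = []
    for file_name, xml_text in files.items():
        for node in ET.fromstring(xml_text).iter("Account"):
            merged.append((file_name, node))
    return merged


def failures_per_file(merged: List[Tuple[str, ET.Element]], failed: Iterable[int]) -> Dict[str, int]:
    """Count failed accounts (given by their index in the merged list) per source file."""
    report: Dict[str, int] = {}
    for index in failed:
        file_name = merged[index][0]
        report[file_name] = report.get(file_name, 0) + 1
    return report


if __name__ == "__main__":
    files = {
        "batch-1.xml": "<Accounts><Account/><Account/></Accounts>",
        "batch-2.xml": "<Accounts><Account/></Accounts>",
    }
    merged = merge_with_provenance(files)
    print(len(merged), failures_per_file(merged, failed=[0, 2]))
```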

However, the goal of this PoC is to emphasize the importance of parallel processing and decoupled logic in workflow implementation. Both are widely regarded as best practices in system design because they improve performance, scalability, and maintainability. Parallel processing allows tasks to run simultaneously, reducing processing time and improving efficiency. Decoupled logic breaks complex systems down into independent modules, promoting modularity and ease of maintenance. Together, they enable systems to handle increasing workloads, adapt to change, and maintain high levels of reliability.

by Maxwell Francisco, Azure Developer at Luza