Copilot and Data Security: Setting Boundaries for AI in the Workplace

Microsoft Copilot is quickly becoming an indispensable part of the modern digital workplace. By integrating AI into tools like Word, Excel, Teams, and Outlook, Copilot helps streamline communication, automate repetitive tasks, and unlock productivity like never before. But with great power comes great responsibility—and when it comes to data security, Copilot is not your security guard.

Too often, organizations rush to enable AI tools without implementing adequate safeguards. But unlike a human assistant who knows what’s off-limits, Copilot will act on the data and permissions it’s given—unless you explicitly restrict it. Without proper governance, this opens the door to unauthorized data exposure, compliance violations, and insider threats.

The good news? You can enable Copilot securely—if you take a layered, server-side approach. Here’s how to keep your AI assistant smart, but safe.

1. Microsoft Purview: Classify and Protect Your Data

Think of Microsoft Purview as your content firewall. Before worrying about who’s accessing what, make sure your data is properly labeled and governed.

Sensitivity Labels:

Use Purview to apply Sensitivity Labels that tag content based on its confidentiality level—Public, Internal, Confidential, or Highly Confidential.

- Automatically apply labels based on content scanning (e.g. detecting financial data, personal identifiers, or legal terms).

- Encrypt documents or emails with policies that restrict access—even within your organization.

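- Prevent Copilot from interacting with or pulling data from "Highly Confidential" documents, for example by applying label encryption that withholds the EXTRACT usage right from users who should not surface that content through Copilot.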
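
To make the auto-labeling idea concrete, here is a minimal Python sketch of content-based label suggestion. It is not how Purview works internally: Purview relies on sensitive information types and trainable classifiers, while the regular expressions, the `RULES` table, and the `suggest_label` function below are hypothetical stand-ins for illustration.

```python
import re

# Labels ordered from least to most restrictive, matching the tiers named above.
LABELS = ["Public", "Internal", "Confidential", "Highly Confidential"]

# Hypothetical detection rules (pattern, label). These are illustrative only
# and far cruder than a real classification engine.
RULES = [
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "Highly Confidential"),          # SSN-like personal identifier
    (re.compile(r"\b(attorney[- ]client|privileged)\b", re.I), "Confidential"),  # legal terms
    (re.compile(r"\b(invoice|revenue|forecast)\b", re.I), "Internal"),      # financial terms
]


def suggest_label(text: str, default: str = "Public") -> str:
    """Return the most restrictive label whose rule matches the text."""
    best = default
    for pattern, label in RULES:
        if pattern.search(text) and LABELS.index(label) > LABELS.index(best):
            best = label
    return best
```

Calling `suggest_label("Employee SSN: 123-45-6789")` returns `"Highly Confidential"`, while text that matches no rule falls back to `"Public"`. Picking the most restrictive match is a deliberate choice here: a document that trips several rules should inherit the strictest handling.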

Data Loss Prevention (DLP):

DLP policies add real-time enforcement. For example, you can:

- Block Copilot responses that might contain protected info.

- Warn users before sensitive data is shared.

- Log and report any potential policy violations for review.

Best Practice: Combine auto-labeling with DLP to ensure sensitive data never reaches Copilot without scrutiny.
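
The sketch below shows one way to reason about the block, warn, and log behavior described above once content carries a label. The `POLICY` table, the `DlpDecision` type, and the `evaluate` function are assumptions made for this example; actual DLP policies are configured in the Purview portal rather than written as code.

```python
from dataclasses import dataclass


@dataclass
class DlpDecision:
    action: str   # "allow", "warn", or "block"
    logged: bool  # whether the event is recorded for later review


# Hypothetical mapping from sensitivity label to enforcement action.
POLICY = {
    "Public": "allow",
    "Internal": "allow",
    "Confidential": "warn",
    "Highly Confidential": "block",
}

SEVERITY = {"allow": 0, "warn": 1, "block": 2}


def evaluate(source_labels: list[str]) -> DlpDecision:
    """Pick the strictest action across the labels of all sources behind a response."""
    # Unknown or missing labels default to "warn" so unlabeled data still gets scrutiny.
    actions = [POLICY.get(label, "warn") for label in source_labels]
    action = max(actions, key=SEVERITY.__getitem__, default="allow")
    return DlpDecision(action=action, logged=action != "allow")
```

A response grounded in one "Public" file and one "Highly Confidential" file evaluates to `block`, and anything other than `allow` is flagged for logging. Treating unlabeled sources as `warn` reflects the best practice above: data should not reach Copilot without some scrutiny.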

2. Azure AD & Microsoft Graph: Restrict What Copilot Can See

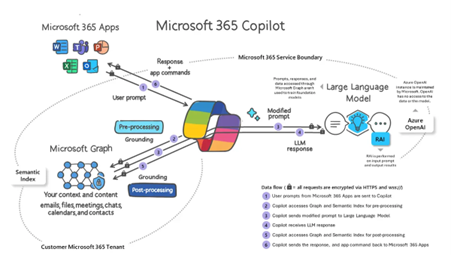

Copilot leverages Microsoft Graph to fetch data from services like Outlook, Teams, OneDrive, and SharePoint. That means it inherits the permissions of the user—and operates within the constraints you define in Azure AD.

Role-Based Access Control (RBAC):

Set up clear roles to prevent over-permissioned accounts. For example:

- A marketing user shouldn’t be able to access HR documents via Copilot.

- Executives may need broader access—but that should come with tighter DLP policies.
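
The permission inheritance described above can be pictured with a toy model. The `Document` type, the group names, and the `visible_to` function are hypothetical and only illustrate the principle; they are not part of Microsoft Graph or Copilot.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    title: str
    allowed_groups: set[str] = field(default_factory=set)


def visible_to(user_groups: set[str], corpus: list[Document]) -> list[str]:
    """Return titles the user could open directly.

    An assistant acting on the user's behalf sees exactly this set, no more.
    """
    return [doc.title for doc in corpus if doc.allowed_groups & user_groups]


# Invented sample data for illustration.
corpus = [
    Document("Campaign brief", {"Marketing"}),
    Document("Salary bands", {"HR"}),
    Document("Company handbook", {"Marketing", "HR", "Executives"}),
]
```

With this data, `visible_to({"Marketing"}, corpus)` returns the campaign brief and the handbook but never the salary bands. The flip side is the point of this section: add the marketing user to the HR group by mistake and the salary bands appear with no further change.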

Conditional Access:

Add context-aware controls:

- Block Copilot usage on unmanaged or personal devices.

- Require MFA before accessing sensitive data.

- Limit access by geographic location or session risk.
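
As a rough mental model, the three controls above combine into a single decision per sign-in. The sketch below is a simplification under stated assumptions: the `SignIn` fields, the `ALLOWED_COUNTRIES` list, and the `decide` function are invented for this example and do not reproduce how Conditional Access evaluates policies.

```python
from dataclasses import dataclass


@dataclass
class SignIn:
    device_managed: bool
    mfa_satisfied: bool
    country: str  # ISO 3166-1 alpha-2 code
    risk: str     # "low", "medium", or "high"


# Hypothetical allow-list of sign-in locations.
ALLOWED_COUNTRIES = {"PT", "ES", "DE"}


def decide(sign_in: SignIn) -> str:
    """Return "block", "require_mfa", or "allow" for a sign-in attempt."""
    # Unmanaged or personal devices are blocked outright.
    if not sign_in.device_managed:
        return "block"
    # Sign-ins from unexpected locations or with high session risk are blocked.
    if sign_in.country not in ALLOWED_COUNTRIES or sign_in.risk == "high":
        return "block"
    # Everything else must satisfy MFA before reaching sensitive data.
    if not sign_in.mfa_satisfied:
        return "require_mfa"
    return "allow"
```

For instance, `decide(SignIn(True, False, "PT", "low"))` returns `"require_mfa"`. Blocking conditions are checked first so that a successful MFA prompt can never override a device or location restriction.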

Remember: It’s not just about who can use Copilot, but what Copilot can use on their behalf.

3. Defender for Cloud Apps: Watch the Behavior, Not Just the Role

Even with strong access policies, you need to monitor for abnormal usage patterns. Defender for Cloud Apps adds a behavioral layer that detects risks in real time.

Real-Time Session Control:

Control what Copilot can do in an active session. You can:

- Restrict download or copy-paste actions.

- Enforce read-only mode for certain document types.

- Prompt users for justification when accessing sensitive content.

Anomaly Detection:

Set up alerts for:

- A sudden spike in file access from Copilot.

- Unusual hours or locations of usage.

- Attempts to interact with high-risk content repositories.
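
To show what a "sudden spike" or "unusual hours" alert might look like in principle, here is a small sketch using a standard-deviation threshold. This is a generic statistical technique, not the detection logic Defender for Cloud Apps uses; the function names, the threshold of 3, and the working-hours window are assumptions.

```python
from statistics import mean, stdev


def is_spike(history: list[int], today: int, threshold: float = 3.0) -> bool:
    """Flag today's file-access count if it sits well above the historical baseline."""
    if len(history) < 2:
        return False  # not enough data to form a baseline
    baseline, spread = mean(history), stdev(history)
    if spread == 0:
        # A perfectly flat history: any increase counts as unusual.
        return today > baseline
    # Number of standard deviations above the mean.
    return (today - baseline) / spread > threshold


def is_unusual_hour(hour: int, start: int = 7, end: int = 20) -> bool:
    """Flag activity outside the assumed working window [start, end)."""
    return not (start <= hour < end)
```

With a week of daily counts around 40, a day with 44 accesses passes quietly while a day with 400 is flagged. In practice you would tune the threshold per user or per team, since a fixed value produces noise for people whose workloads vary naturally.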

Think of this as your AI watchtower—spotting threats before they escalate.

4. Entitlement Management: Least Privilege by Default

Access to Teams, SharePoint, and OneDrive is often granted liberally and reviewed rarely. Copilot surfaces and amplifies this sprawl.

What to Do:

- Use Microsoft Entra ID Entitlement Management to manage group and app access workflows.

- Run Access Reviews on a recurring schedule to audit who has access to what.

- Revoke unnecessary access—especially for external collaborators and dormant accounts.
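
The review step above can be sketched as a filter over exported user records. The field names `userType` and `signInActivity.lastSignInDateTime` are modeled on the Microsoft Graph `user` resource, but the sample records, the 90-day dormancy window, and the `review_candidates` function are assumptions for illustration; the sketch makes no API calls.

```python
from datetime import datetime, timedelta, timezone


def parse_ts(value: str) -> datetime:
    """Parse an ISO 8601 timestamp, accepting a trailing "Z" for UTC."""
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def review_candidates(users: list[dict], now: datetime,
                      dormant_after_days: int = 90) -> dict[str, list[str]]:
    """Map each user worth reviewing to the reasons they were flagged."""
    cutoff = now - timedelta(days=dormant_after_days)
    flagged = {}
    for user in users:
        reasons = []
        if user.get("userType") == "Guest":
            reasons.append("external")
        last = (user.get("signInActivity") or {}).get("lastSignInDateTime")
        # A missing sign-in timestamp is treated as dormant.
        if last is None or parse_ts(last) < cutoff:
            reasons.append("dormant")
        if reasons:
            flagged[user["userPrincipalName"]] = reasons
    return flagged
```

The output is a review list, not a revocation list: a flagged guest may be a legitimate partner, which is why the reasons are kept alongside each account for the reviewer.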

Copilot doesn’t discriminate. If a user has access, so does the AI. Clean up before you light it up.

5. App Consent & Service Principals: Know What Copilot Is Authorized to Do

Behind the scenes, Copilot and the apps that extend it appear in your tenant as enterprise applications backed by service principals, identities that can be granted permissions in your environment. That means permissions can be consented to, and integrations added to apps, scripts, or third-party services, without your direct knowledge.

Mitigate This Risk:

- Review Enterprise App permissions granted to Copilot and revoke anything unnecessary.

- Monitor consent logs to see what scopes have been authorized.

- Disable or scope down Copilot’s Graph API permissions if your use case is limited.
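
One way to approach the scope review above is to compare granted scopes against an allow-list. The records below follow the shape of the Microsoft Graph `oauth2PermissionGrant` resource (`clientId`, `consentType`, and a space-separated `scope` string), but the allow-list, the client IDs, and the `excess_scopes` function are hypothetical, and the sketch works on exported data rather than calling Graph.

```python
# Scopes this hypothetical tenant considers acceptable for its use case.
ALLOWED_SCOPES = {"User.Read", "Files.Read", "Mail.Read"}


def excess_scopes(grants: list[dict]) -> dict[str, set[str]]:
    """Map each client to the delegated scopes it holds beyond the allow-list."""
    findings: dict[str, set[str]] = {}
    for grant in grants:
        # The scope field is a single space-separated string.
        granted = set(grant.get("scope", "").split())
        extra = granted - ALLOWED_SCOPES
        if extra:
            findings.setdefault(grant["clientId"], set()).update(extra)
    return findings
```

A client granted `User.Read Sites.Read.All Files.ReadWrite.All` would be reported with `Sites.Read.All` and `Files.ReadWrite.All` as excess. Starting from an allow-list instead of a deny-list means newly introduced scopes are flagged by default.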

Trust, but verify. Service principals are invisible doors—make sure they’re not wide open.

⚠️ Highlight: If You Can See It, So Can Copilot

One of the most misunderstood aspects of Copilot is the belief that it "breaks into" data. It doesn’t. Copilot doesn’t bypass permissions—it simply surfaces content that a user already has access to.

That means:

- If a sensitive document is unintentionally shared with “Everyone” in your organization, Copilot can surface it.

- If a user is part of a SharePoint group with broad read access, Copilot will use that access.

Keep a Close Eye On:

- File and folder sharing settings, especially “Anyone with the link” or “Organization-wide” shares.

- Team and group memberships, which may grant broader access than intended.

- OneDrive personal file sharing, which can be easily overlooked.
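
The sharing checks in the list above lend themselves to a simple audit over exported permission data. In the sketch below, the `link.scope` values `anonymous` and `organization` follow the Microsoft Graph `permission` resource for sharing links; the wrapper shape (`name`, `permissions`), the sample files, and the `overshared` function are assumptions for this example.

```python
# Sharing-link scopes that expose content beyond named individuals.
BROAD_SCOPES = {"anonymous", "organization"}


def overshared(items: list[dict]) -> list[tuple[str, str]]:
    """Return (item name, link scope) pairs for every broadly shared item."""
    findings = []
    for item in items:
        for perm in item.get("permissions", []):
            # Direct grants have no "link" facet, so guard against its absence.
            scope = (perm.get("link") or {}).get("scope")
            if scope in BROAD_SCOPES:
                findings.append((item["name"], scope))
    return findings
```

An item shared through an organization-wide link shows up as `("Payroll.xlsx", "organization")`, for example, while files shared only with named users are skipped. Anything this audit finds is content Copilot can surface for every user covered by that link.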

Copilot doesn’t create new risks—it magnifies existing ones. Review what’s open before it becomes a prompt away from exposure.

Final Thoughts: Control Before Capability

Copilot is a game-changer—but without proactive security measures, it can be a gateway to data sprawl, compliance risks, and unintended exposure. AI doesn’t make decisions—it follows rules. Your job is to make sure those rules are solid.

Before you let Copilot roam your digital estate:

- Classify and protect your data.

- Review and limit access intelligently.

- Monitor behavior in real time.

- Audit permissions regularly.

Give Copilot the keys—but only after installing locks, setting alarms, and drawing a map of where it can go.

For more information, see "Data, Privacy, and Security for Microsoft 365 Copilot" on Microsoft Learn.

by Andreia Almeida Lopes, Modern Workplace Specialist at Luza